An Overview of the scikit-learn Clustering Package

The second episode of the scikit-learn series, which explains the well-known Python Library for Machine Learning

Clustering is an unsupervised Machine Learning technique: there is neither a labeled training set nor a set of predefined classes. It is used when many records should be grouped according to a similarity criterion, such as distance.

A clustering algorithm takes a dataset as input and returns a list of labels as output, one per record, identifying the cluster each record is assigned to.
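As a minimal sketch of this input/output contract, the snippet below clusters a small synthetic dataset and inspects the returned labels. The dataset (generated with make_blobs), the choice of KMeans, and all parameter values are assumptions made only for illustration.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic dataset (illustrative assumption): 150 two-dimensional points around 3 centers
X, _ = make_blobs(n_samples=150, centers=3, cluster_std=0.6, random_state=0)

# Fit the model and get one integer label per input row
model = KMeans(n_clusters=3, n_init=10, random_state=0)
labels = model.fit_predict(X)

print(labels.shape)       # one label per record
print(np.unique(labels))  # the distinct cluster ids
```

The labels are arbitrary integers: they identify groups, but the numbering itself carries no meaning.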

Cluster analysis is an iterative process: at each step, the current result is evaluated and used to adjust the algorithm or its parameters for the next iteration, until the desired result is obtained.

The scikit-learn library includes a subpackage, called sklearn.cluster, which implements the most common clustering algorithms.

In this article, I describe:

classes and functions provided by sklearn.cluster

tuning parameters

evaluation metrics for clustering algorithms

1 Classes and Functions

The sklearn.cluster subpackage defines two ways to apply a clustering algorithm: classes and functions.

1.1 Classes

In the class strategy, you create an instance of the desired clustering class, optionally specifying its parameters. Then you fit the model to the data and, finally, you use the fitted model to predict clusters:

from sklearn.cluster import AffinityPropagation

model = AffinityPropagation()

model.fit(X)

labels = model.predict(X)

1.2 Functions

In addition to the classes, scikit-learn provides functions that perform the model fitting. Compared with classes, functions are convenient when a single dataset must be analyzed just once, in a single place.

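In this case, it is sufficient to call the function to get the clustered data. Note that affinity_propagation() expects a matrix of similarities between points rather than the raw data, and returns the indices of the cluster centers together with the labels:

from sklearn.cluster import affinity_propagation

from sklearn.metrics import euclidean_distances

S = -euclidean_distances(X, squared=True)

cluster_centers_indices, labels = affinity_propagation(S)

2 Tuning Parameters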

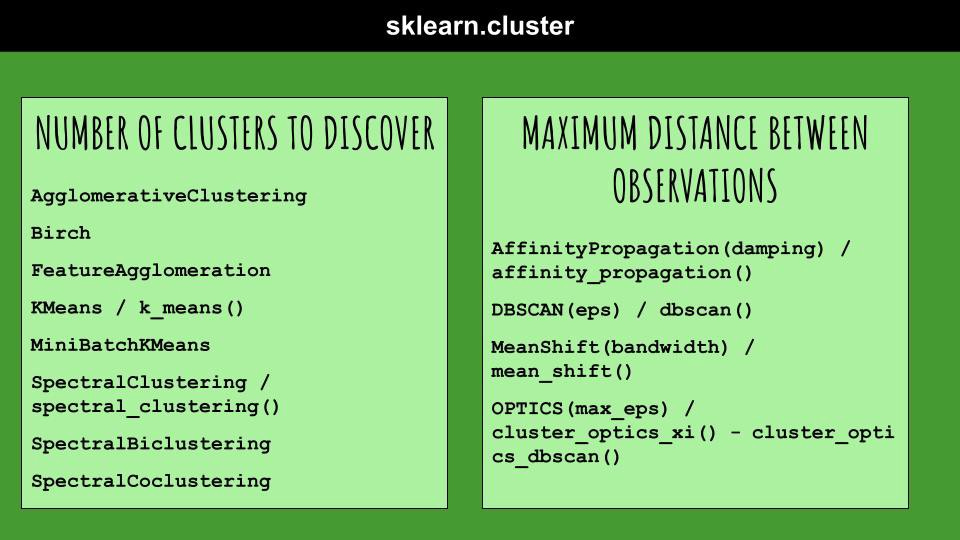

Clustering algorithms can be split into two big families, depending on the main parameter to be tuned:

the number of clusters to discover in the data

minimum distance between observations.

2.1 Number of Clusters to Discover

In this group of clustering algorithms, you should tune at least the number of clusters to find. In scikit-learn, this parameter is usually called n_clusters.

The sklearn.cluster package provides the following clustering algorithms belonging to this category (both the class and the function are shown for each algorithm):

KMeans/k_means()

SpectralClustering/spectral_clustering()
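To make the class/function pairing concrete, here is a small sketch that runs the first pair, KMeans and k_means(), on the same data. The synthetic dataset and the parameter values (n_clusters=4, n_init=10, the random seeds) are illustrative assumptions, not recommendations.

```python
from sklearn.cluster import KMeans, k_means
from sklearn.datasets import make_blobs

# Synthetic dataset (illustrative assumption): 200 points around 4 centers
X, _ = make_blobs(n_samples=200, centers=4, cluster_std=0.5, random_state=42)

# Class API: the fitted estimator keeps its state and can label new data later
model = KMeans(n_clusters=4, n_init=10, random_state=42).fit(X)
class_labels = model.labels_

# Function API: a one-shot call that returns its results as a tuple
centroids, func_labels, inertia = k_means(X, n_clusters=4, n_init=10, random_state=42)

print(centroids.shape)   # (n_clusters, n_features)
print(len(func_labels))  # one label per row of X
```

The class version is the natural choice when the fitted model has to be reused, for example through model.predict() on new records, while the function version only returns the results of a single run.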

For this category of algorithms, the main issue involves finding the best number of clusters. Different approaches can be used, such as the Elbow Method.
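One possible way to apply the Elbow Method is sketched below, assuming KMeans and its inertia_ attribute (the within-cluster sum of squared distances) as the quantity to inspect. The dataset and the range of candidate values for n_clusters are made up for the example.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic dataset (illustrative assumption): 300 points around 4 centers
X, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=1)

# Fit one model per candidate number of clusters and record its inertia
inertias = {}
for k in range(1, 9):
    model = KMeans(n_clusters=k, n_init=10, random_state=1).fit(X)
    inertias[k] = model.inertia_

# Print the curve; plotting it makes the "elbow" easier to spot
for k, value in inertias.items():
    print(k, round(value, 1))
```

The inertia tends to drop as the number of clusters grows, so the value to look for is the point where the curve bends and further clusters bring only a small improvement. Reading the elbow remains a judgment call, and it is not always sharp on real data.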
